Determining Information Flow Requirements

To determine the information flow of the organization, you need to consider a number of factors. Because access to data or information should be one of the main concerns when planning the network design, the main factors are:

- The data that needs to be accessed by users.

- The location of users.

- The time period when users need to access data.

- The method by which users access data.

A few typical questions you should ask when determining the information flow of the organization are:

- How is information passed from one point to another?

- What information is created within each department?

- What are documents utilized for?

- How are documents stored?

- Is information routed in the company and accessed by other users?

- Does the information need to be printed?

- Does information need to be published over the Internet?

- Are mainframes being utilized?

- Where are systems located?

- What servers are being utilized?

- Where are the servers located?

- Is information being stored in a database?

- What databases currently exist?

- Where are the databases located?

- What is the main mechanism used for communication between users? Is it only e-mail, or are there other mechanisms as well?

- Are there any public folders utilized on Exchange servers?

- Does an intranet exist?

- Are any phone systems feeding into the network?

Understanding the Management Model

When planning a network infrastructure design, it is important to obtain the support of the different levels of management within the organization's management model. Obtaining the support of the users who access information to perform their daily tasks is equally important.

The typical levels within an organization’s management hierarchy are listed here:

- President/Chief executive officer (CEO): This is the head of the organization. In a privately owned company, the president is usually the owner. In a publicly owned company, the head of the organization is the chief executive officer (CEO).

- Board of Directors: A board of directors oversees the operations of the organization or company. The board of directors could include the following roles:

- A chairperson, who heads the board of directors

- A financial officer

- A chief technical officer

- A secretary

- Various other stakeholders

- Executive/Senior Management: Executive or senior management is made up of the following roles:

- President

- Senior vice presidents

- Vice presidents

- CEO

- Board of Directors

- Middle management: Middle management is made up of the following roles:

- Directors

- Managers

- All other roles: This includes department heads, team leaders, and finally staff or users.

When you are determining the management model being utilized in the company, you should note the following:

- Draw a simple organizational chart that shows each individual in the management model and the position each individual holds.

- Determine which services are outsourced, and then note the following:

- The name of the service being outsourced.

- The vendor for the service

- The cost associated with the service.

- Determine which individual(s) are accountable for the IT budget.

- Determine how changes to the network are made, and who makes those decisions.

Analyzing Organizational Structures (Existing/planned)

To design a network infrastructure that meets the requirements of a company, you have to determine and understand the company's organizational structure. A good starting point is to identify the higher-level segments or departments of the company, and then determine how each department interrelates with the other segments.

The main issues that should be clarified when you analyze planned or existing organizational structures are listed here:

- The manner in which the management organization is defined

- The way in which the organization is logically defined.

Only after you have determined the organizational structure of the company will you be able to determine the following:

- Whether the management model is flat or not.

- Whether the organizational structure is centralized or decentralized.

- The number of levels within the organization, and what each level consists of.
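Once the organizational chart has been drawn, counting its levels is a simple tree traversal. The following Python sketch assumes that the chart is recorded as a hypothetical mapping from each position to the positions that report directly to it. The role names and the three-level cut-off for calling a model "flat" are illustrative assumptions, not a standard definition.

```python
from collections import deque

# Hypothetical organizational chart: each position maps to its direct reports.
ORG_CHART: dict[str, list[str]] = {
    "CEO": ["VP Sales", "VP Operations"],
    "VP Sales": ["Sales Manager"],
    "VP Operations": ["IT Director"],
    "Sales Manager": ["Sales Staff"],
    "IT Director": ["Team Leader"],
    "Team Leader": ["Help Desk Staff"],
    "Sales Staff": [],
    "Help Desk Staff": [],
}


def count_levels(chart: dict[str, list[str]], root: str) -> int:
    """Return the number of management levels, counting the root as level 1."""
    levels = 0
    frontier = deque([root])
    while frontier:
        levels += 1
        # Consume exactly one level of the hierarchy per pass of the outer loop
        for _ in range(len(frontier)):
            position = frontier.popleft()
            frontier.extend(chart.get(position, []))
    return levels


def is_flat(chart: dict[str, list[str]], root: str, max_levels: int = 3) -> bool:
    """Treat the model as flat if it has at most max_levels levels (assumed cut-off)."""
    return count_levels(chart, root) <= max_levels


if __name__ == "__main__":
    print(count_levels(ORG_CHART, "CEO"))  # 5
    print(is_flat(ORG_CHART, "CEO"))       # False
```

A breadth-first traversal is used so that each pass of the outer loop corresponds to exactly one management level.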

Defining Management Requirements (Priorities)

To identify the priorities of the company, ask the following questions:

- What is the mission of the company? This is usually evident on the company’s intranet and newsletter, and in the annual report.

- What aspects or elements are considered important?

- What are the priorities that are specified or enforced in company meetings?

The main company or management issues which should be included and addressed when you plan a network infrastructure for the company are listed here:

- Network availability: Network availability becomes more important as users increasingly expect the network to be accessible at all times. To ensure availability, mission critical applications should be located on systems that have high availability features. Planning for high network availability should therefore be a component of your network design.

The technologies provided by Windows Server 2003 which can be used to provide high availability are:

- Microsoft Cluster Service: Enables organizations to increase server availability for mission critical resources by grouping multiple physical servers into a cluster. A cluster is a group of two or more physical servers that appear to clients, and operate, as a single network server. Servers in the cluster are referred to as nodes, while the services and applications hosted by the cluster are referred to as resources. This clustering technology provides redundancy for server failures because another node in the cluster resumes the services of the failed node, which increases availability for mission critical applications and network services. Application response time can also be improved by distributing applications across multiple servers.

- Network Load Balancing (NLB) Service: A clustering technology that provides high availability and scalability. NLB is typically used to distribute Web requests across a cluster of servers running Internet server applications. If a server in the NLB cluster fails, NLB redirects the requests that would have gone to that server to the remaining servers. Client requests are load balanced according to the configured load balancing parameters, so the servers in the NLB cluster share the processing load of client requests. To ensure high performance, NLB uses a distributed filtering algorithm to match incoming client requests to the NLB servers in the cluster when making load balancing decisions.
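The idea behind distributed filtering can be illustrated with a simplified model. Every host sees every incoming request, applies the same deterministic function to the client address, and only the host that "owns" the result accepts the request, so no central dispatcher is needed. The Python sketch below is an illustration under stated assumptions, not Microsoft's actual NLB algorithm. The host names, the use of SHA-256 as the hash, and the bucket count are all hypothetical.

```python
import hashlib

NUM_BUCKETS = 60  # hypothetical number of hash buckets shared by all hosts


def bucket_for(client_ip: str) -> int:
    """Map a client IP address to a bucket; every host computes the same value."""
    digest = hashlib.sha256(client_ip.encode("ascii")).digest()
    return int.from_bytes(digest[:4], "big") % NUM_BUCKETS


def assign_buckets(live_hosts: list[str]) -> dict[int, str]:
    """Spread buckets round-robin over the hosts that are currently alive."""
    ordered = sorted(live_hosts)
    return {b: ordered[b % len(ordered)] for b in range(NUM_BUCKETS)}


def accepts(host: str, client_ip: str, live_hosts: list[str]) -> bool:
    """Each host runs this filter independently; exactly one live host returns True."""
    return assign_buckets(live_hosts)[bucket_for(client_ip)] == host


if __name__ == "__main__":
    hosts = ["web1", "web2", "web3"]
    client = "192.0.2.17"
    print([h for h in hosts if accepts(h, client, hosts)])
    # Simulate web2 failing: the surviving hosts recompute the bucket map,
    # loosely analogous to NLB convergence after a host leaves the cluster
    survivors = ["web1", "web3"]
    print([h for h in survivors if accepts(h, client, survivors)])
```

Because every host applies the same function to the same input, the hosts agree on an owner for each request without exchanging messages per request.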

- Fault tolerance: A fault tolerant system is set up so that it can continue to operate when certain system components fail. Fault tolerance refers to the use of hardware and software technologies to prevent data loss in the event of a failure, such as a system or hardware failure. Setting up a fault tolerant system becomes vital when you have servers running mission critical applications. You can include redundant components, paths, and services in the network topology to eliminate single points of failure and ensure a highly available topology. To protect data from drive failures and to add fault tolerance to your file systems, use RAID technology. You can implement RAID in hardware or in software. Windows Server 2003 supports hardware-based RAID solutions. It also provides a software implementation of RAID (mirrored volumes and RAID-5 volumes on dynamic disks) that maintains data access when a single disk fails. Data redundancy occurs when a computer writes data to more than one disk.
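A RAID-5 volume survives a single disk failure because the parity block in each stripe is the bitwise XOR of the data blocks. Any one missing block can therefore be recomputed from the others. The Python sketch below models a single stripe, using hypothetical byte strings to stand in for disk blocks. Real RAID-5 implementations also rotate parity across the disks, which this sketch omits.

```python
from functools import reduce
from typing import Optional


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Bitwise XOR of equal-length blocks, byte position by byte position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def build_stripe(data_blocks: list[bytes]) -> list[bytes]:
    """One stripe: the data blocks (one per disk) followed by their parity block."""
    return data_blocks + [xor_blocks(data_blocks)]


def rebuild(stripe: list[Optional[bytes]]) -> list[bytes]:
    """Recompute the single missing block (None) from the surviving blocks."""
    missing = [i for i, block in enumerate(stripe) if block is None]
    if len(missing) != 1:
        raise ValueError("expected exactly one failed disk in the stripe")
    survivors = [block for block in stripe if block is not None]
    repaired = list(stripe)
    repaired[missing[0]] = xor_blocks(survivors)
    return repaired


if __name__ == "__main__":
    stripe = build_stripe([b"ACCT", b"PAY1", b"HR42"])  # three data disks + parity
    degraded = [stripe[0], None, stripe[2], stripe[3]]  # the second disk has failed
    print(rebuild(degraded)[1])  # b'PAY1'
```

With single parity, only one missing block per stripe can be recovered. This is why a RAID-5 volume is lost if a second disk fails before the first failed disk is replaced.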

A few strategies which you can employ to ensure fault tolerance in your system are summarized below:

- To enable your servers to shut down properly when a power failure occurs, use an uninterruptible power supply (UPS).

- To ensure that no data is lost when a hard disk failure occurs, deploy one or more RAID arrays for both system and data storage. Then only the failed disk needs to be replaced when a disk failure occurs. RAID adds fault tolerance to file systems and increases data integrity and availability because it stores redundant data (mirrored copies or parity information). Some RAID levels can improve disk performance as well.

- To provide redundancy for SCSI controller failures, you should utilize multiple SCSI adapters.

- To provide redundancy for network card failures, utilize multiple network cards.

- To cater for server failures where a server holds mission critical data, or runs mission critical applications, utilize clusters to provide redundancy and failover.

- To design a fault tolerant file system, implement the Distributed File System (Dfs). Dfs is a single hierarchical file system that organizes shared folders located on multiple computers in the network into a single logical file system structure. Its load balancing and fault tolerance features provide high availability of the file system and improved performance.
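A Dfs namespace can be thought of as a table that maps logical paths to one or more physical share targets. Fault tolerance comes from having more than one target for the same folder. The Python sketch below models that idea. The namespace path, server names, and reachability check are hypothetical. Real Dfs clients also cache referrals and prefer targets in their own site, and this sketch does not model that behavior.

```python
from typing import Callable, Optional

# Hypothetical Dfs namespace: logical folder -> replicated share targets
NAMESPACE: dict[str, list[str]] = {
    r"\\contoso\public\docs": [r"\\fs1\docs", r"\\fs2\docs"],
    r"\\contoso\public\tools": [r"\\fs3\tools"],
}


def resolve(path: str, is_online: Callable[[str], bool]) -> Optional[str]:
    """Return the first reachable target for a logical path, or None if none is up."""
    for target in NAMESPACE.get(path, []):
        if is_online(target):
            return target
    return None


if __name__ == "__main__":
    offline = {r"\\fs1\docs"}  # simulate a failed file server
    print(resolve(r"\\contoso\public\docs", lambda t: t not in offline))  # \\fs2\docs
```

Users keep using the same logical path while the physical server behind it changes. This is the property that lets Dfs hide a failed server from users.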

- Cost: This is probably one of the trickiest areas. While cutting costs is almost always the preferred strategy, remember that an incorrectly costed network design can lead to more problems further down the line.

- Scalability: A scalable network is able to expand to accommodate growth and enhancements. Scalability can refer to increasing any of the following system resources of your existing hardware:

- Processors

- Network adapters

- Memory

- Disk drives

You can also use clustering technologies to address scalability requirements.

- Security: Ensuring that sensitive data is kept safe can be considered more important than ensuring optimal network performance. Security focuses on authentication, data protection, and access control:

- Authentication deals with verifying an individual's identity before any resources are accessed. With Windows Server 2003, authentication consists of interactive logon and network authentication.

- Data protection involves ensuring data confidentiality and data integrity. You can use encryption algorithms and encryption keys to ensure the privacy of data. When encryption is implemented to protect data, you need a decryption key to decipher the data. The strength of encryption is determined by the encryption algorithm used and the length of the encryption key. You can protect files on an NTFS volume by using the Encrypting File System (EFS), which relies on the user's public key and private key.

- Digital signing is a mechanism that can be implemented to ensure data integrity. When a file is digitally signed, you can verify who signed it and detect whether it has been modified since it was signed.

- Access control involves restricting data and resource access to users that are configured with the appropriate rights to access the resources/data. You implement access control by assigning rights to users, and then specifying permissions for objects.

- Auditing is yet another security feature that enables you to monitor events that can result in security breaches.
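In simplified terms, a digital signature is created by encrypting a hash of the data with the signer's private key. Verifying the signature involves recomputing the hash and comparing the two values. The Python sketch below shows only the hash-comparison part of that process, using the standard hashlib module. It therefore illustrates how modification is detected, but, unlike a real signature, it does not prove who created the file. The file contents are hypothetical.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, recorded_digest: str) -> bool:
    """Compare the current digest with the one recorded when the file was signed."""
    # compare_digest performs a constant-time comparison
    return hmac.compare_digest(fingerprint(data), recorded_digest)


if __name__ == "__main__":
    original = b"Q3 budget: 120000"
    digest = fingerprint(original)                      # recorded at signing time
    print(is_unmodified(original, digest))              # True
    print(is_unmodified(b"Q3 budget: 920000", digest))  # False
```

A change of a single character produces a completely different digest, so tampering is detected even when the edit is small.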

When you define the security policy of the organization, remember to base it on the security requirements and procedures of the company.

A few methods which you can include to implement physical security are detailed below:

- All servers should be secured in a locked server room. Only those individuals that need access should be permitted to access the server room using a key or security code.

- All hubs, routers and switches should be placed in a wiring closet, or in a locked cable room.

- You should use case locks on your servers. You can also install case locks on other systems that can be physically accessed.

- You should also restrict access to floppy drives.

- Set a BIOS password on all systems. This prevents an unauthorized person from accessing the BIOS.

A few methods which you can use to secure the operating system are listed here:

- Install antivirus software on all servers.

- Consider using the NTFS file system because it provides more security than the FAT file system.

- You should disable any services that are not required. Use the Services console in Administrative Tools to control which services will run.

- You should also disable protocols that are not required.

- You should disable user accounts that are inactive, and delete those user accounts that are not required.

- Ensure that the password configured for the Administrator account is a strong password.

- Define the password policy, Kerberos policy, account lockout policy, and audit policy in the domain security policy.
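The "strong password" requirement can be made concrete. The Python sketch below approximates the Windows Server 2003 "Password must meet complexity requirements" policy: at least six characters, characters from at least three of four categories, and no occurrence of the account name. It is a simplified illustration. The real policy also checks parts of the user's full name, and the minimum length used here is an assumption that you should align with your domain password policy.

```python
import string

MIN_LENGTH = 6  # assumed; align with the minimum length in your password policy

CATEGORIES = (
    set(string.ascii_uppercase),
    set(string.ascii_lowercase),
    set(string.digits),
)


def category_count(password: str) -> int:
    """Count how many of the four character categories appear in the password."""
    count = sum(1 for chars in CATEGORIES if any(c in chars for c in password))
    # Fourth category: any character that is not an ASCII letter or digit
    if any(not (c.isascii() and c.isalnum()) for c in password):
        count += 1
    return count


def meets_complexity(password: str, account_name: str) -> bool:
    """Simplified approximation of the Windows complexity rule."""
    if len(password) < MIN_LENGTH:
        return False
    if account_name and account_name.lower() in password.lower():
        return False
    return category_count(password) >= 3


if __name__ == "__main__":
    print(meets_complexity("Adm1n!2003", "jsmith"))   # True
    print(meets_complexity("password", "jsmith"))     # False
    print(meets_complexity("Jsmith#2024", "jsmith"))  # False
```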

- Performance: The level of performance achieved with your network design directly affects whether users can efficiently perform their tasks, and whether the customer's experience is a good one. A bottleneck is a condition in which one system resource limits the performance of other system resources, and therefore of the system as a whole. A bottleneck can occur in a Windows subsystem or on any component of the network, and can be the reason why system performance is slow.

Bottlenecks typically occur when:

- Insufficient system resources exist, so the available resources are overused.

- Resources are not sharing workloads equally. This usually occurs when multiple instances of the identical resource are present.

- Application(s) could also be excessively using a certain system resource. When this occurs, you might need to upgrade or even replace the problematic application.

- A resource could be faulty or not functioning correctly. You might need to reconfigure, or even replace the resource.

- Settings are configured incorrectly.
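The first two causes, an overused resource and identical resources that do not share the workload equally, can be spotted mechanically from performance counter samples. The Python sketch below uses hypothetical utilization readings and assumed thresholds: 85 percent average busy time, and a 30-point spread between identical instances. It illustrates the reasoning only and is not a replacement for System Monitor. Base your own thresholds on a measured baseline.

```python
from statistics import mean

BUSY_THRESHOLD = 85.0       # assumed: average utilization (%) treated as overuse
IMBALANCE_THRESHOLD = 30.0  # assumed: spread (%) between identical instances


def find_bottlenecks(samples: dict[str, list[float]]) -> list[str]:
    """Flag overused instances and uneven load across identical instances.

    samples maps an instance name such as "CPU 0" to utilization readings (%).
    Instances whose names share the first word (e.g. "CPU") are treated as
    multiple instances of the identical resource.
    """
    averages = {name: mean(values) for name, values in samples.items()}
    findings = [
        f"{name}: overused ({avg:.0f}% average)"
        for name, avg in averages.items()
        if avg >= BUSY_THRESHOLD
    ]
    groups: dict[str, list[float]] = {}
    for name, avg in averages.items():
        groups.setdefault(name.split()[0], []).append(avg)
    for kind, avgs in groups.items():
        if len(avgs) > 1 and max(avgs) - min(avgs) >= IMBALANCE_THRESHOLD:
            findings.append(f"{kind}: workload not shared equally across instances")
    return findings


if __name__ == "__main__":
    readings = {
        "CPU 0": [92, 95, 90],
        "CPU 1": [20, 25, 22],
        "Disk 0": [40, 45, 50],
    }
    for finding in find_bottlenecks(readings):
        print(finding)
```

With these sample readings, the sketch reports CPU 0 as overused and the two processors as unevenly loaded. Disk 0 is not flagged.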

System and server performance is typically impacted by the following:

- Resources are configured incorrectly, which in turn causes them to be heavily utilized.

- Resources are unable to handle the load they are configured to deal with. In this case, it is usually necessary to upgrade the resource or add components that improve its capacity.

- Resources that are malfunctioning impair performance.

- The workload is not configured to be evenly handled by multiple instances of the identical resource.

- Resources are inefficiently allocated to one or more applications.

To optimize server performance, you can perform a number of tasks, such as:

- Reducing the load of network traffic on the particular server by implementing load balancing strategies.

- Reducing CPU usage

- Improving disk I/O

The subsystems which should be optimized to tune the server for application performance are:

- Memory subsystem

- Network subsystem

- Processor subsystem

- Processes subsystem

- Disk subsystem

Defining User Requirements (Priorities)

The network design which you create should provide the following:

- Enable users to perform their daily tasks or operations.

- Enable the network to scale to meet future growth.

There are a number of network services which users are dependent on to perform their daily operations. A few of the more widely utilized network services are listed here:

- E-mail services: A company can use either of these methods to make e-mail available to users:

- Implement an e-mail technology such as Microsoft Exchange so that the company can maintain its own e-mail infrastructure.

- Outsource its e-mail to an Internet Service Provider (ISP).

When designing an e-mail services solution for your network design, include the following important elements:

- Design a high performance and high availability e-mail services solution that meets the requirements of your users.

- The design should include fault tolerance as well.

- Task Management: Enabling task management features for users through Microsoft Outlook or Lotus Notes provides the following benefits:

- More efficient time management for users.

- Automatic meeting scheduling.

- Automatic notifications of appointments.

- Tracking of meeting attendance.

- Team leaders can manage appointments and meetings for groups of users.

- Central data storage: A file server should be used to centralize the storage of shared data. Shared folders enable users to access folders over the network, and shared folder permissions are used to restrict access to a folder or file that is shared over the network. Folder sharing is normally used to grant remote users access to files and folders over the network. Web sharing is used to grant remote users access to files from the Web if Internet Information Services (IIS) is installed. To share folders on NT file system (NTFS) volumes, you must have at least the Read permission. NTFS partitions enable you to specify security for the file system after a user has logged on. NTFS permissions control the access that users and groups have to files and folders on NTFS partitions. You can set an access level for each user, either allowing or denying access to NTFS files and folders. The NTFS file system also includes other features such as encryption, disk quotas, file compression, mounted drives, the NTFS change journal, and multiple data streams. You can also store Macintosh files on NTFS partitions.
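When a user opens a file through a shared folder, both the share permissions and the NTFS permissions apply, and the more restrictive of the two takes effect. When a user logs on locally, only NTFS permissions apply. The Python sketch below models this rule using a simplified, ordered set of access levels (Read < Change < Full Control), which is an assumption made for illustration. Real NTFS permissions are finer-grained, and explicit Deny entries, which override Allow entries, are reduced here to a "no access" level.

```python
from enum import IntEnum
from typing import Optional


class Access(IntEnum):
    """Simplified, ordered access levels (assumption; real ACLs are finer-grained)."""
    NONE = 0
    READ = 1
    CHANGE = 2
    FULL_CONTROL = 3


def effective_access(ntfs: Access, share: Optional[Access]) -> Access:
    """Combine NTFS and share permissions; share is None for local access."""
    if share is None:
        return ntfs          # logged on locally: only NTFS permissions apply
    return min(ntfs, share)  # over the network: the more restrictive level wins


if __name__ == "__main__":
    print(effective_access(Access.FULL_CONTROL, Access.READ).name)  # READ
    print(effective_access(Access.CHANGE, None).name)               # CHANGE
```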

A few important factors which you should consider when you determine the file services for the network design are listed here:

- In large organizations, ensure that data located on multiple servers can be retrieved from one access point.

- You should ensure that users can access data from multiple locations (if applicable).

- For files that are frequently accessed and utilized, try to minimize delays.

- Your design should include the capability of moving data between different servers without affecting the manner in which your users access information.

A few new features and enhancements included with Windows Server 2003 that are specific to the file sharing services are listed here:

- NTFS permissions: In Windows Server 2003, NTFS supports features such as shadow copies, disk quotas, and file encryption. The main feature of the NTFS file system is that you can define local security for files and folders stored on NTFS partitions. You can specify access permissions on files and folders that control which users can access them, and what level of access is allowed for each user or group. NTFS permissions are more precise than share permissions, and you can specify them at both the file level and the folder level. By default, permissions on NTFS volumes are inheritable, which means that files and subfolders inherit permissions from their parent folder. You can, however, configure files and subfolders not to inherit permissions from their parent folder.

- Volume Shadow Copy: Volume shadow copies are a new Windows Server 2003 feature that creates copies of files at a specific point in time or at a set time interval. Shadow copies can be created only on NTFS volumes, and they are enabled per volume to create automatic backups of files or data. When enabled, shadow copies protect you from accidentally losing important files in a network share, because you can restore or recover files that have been accidentally deleted or have become corrupt. Previous versions of files can be copied to the same location or to another location. Volume shadow copies also enable you to compare the current version of a file with a previous version.

- Distributed File System (Dfs): Dfs is a single hierarchical file system that helps organize shared folders on multiple computers in the network. Dfs provides a single logical file system structure by concealing the underlying file share structure within a virtual folder structure. Users see a single file structure even though the folders are located on different file servers within the organization. Dfs therefore provides a single point of access to network files, and users can easily locate and access files and file shares. Its load balancing and fault tolerance features lead to high availability of the file system and improved performance. Administrators can also host a Dfs root on a server cluster to provide improved reliability.

- Disk Quotas: Disk quotas enable you to manage the disk space used by your users on critical NTFS volumes. Disk quotas track disk space usage on a per-user, per-NTFS-volume basis. Usage is charged to the user who owns each file, so the location of the file on the volume is irrelevant. Disk quotas can therefore control and monitor disk space usage for NTFS volumes.

- Offline Files: Offline Files is a client-side feature that was introduced with Windows 2000. It makes it possible for network files and folders to be accessed from the local disk when the network is not available. Offline Files also lets a user mirror server files to a local laptop, and keeps the laptop files and server files in sync. For laptop users, Offline Files ensures that server-based files remain accessible when the laptop is not connected to the network. As soon as the mobile user reconnects to the network, any changes made to the laptop files are synchronized with the server-based files. This synchronization is a simple and transparent process. Although Offline Files is considered a stand-alone technology, you can pair it with Folder Redirection and configure network shares for higher data reliability. By default, redirected folders, including all of their subfolders, are also automatically available offline. You can change this default by enabling the following Group Policy setting: Do not automatically make redirected folders available offline. The settings of the Offline Files feature can be controlled by Group Policy in Active Directory domains.

- Encrypting File System (EFS): EFS enables users to encrypt files and folders, and entire data drives, on NTFS formatted volumes. Even if an unauthorized person manages to access the files and folders because of incorrectly configured NTFS permissions, the files and folders remain encrypted. EFS protects confidential corporate data from unauthorized access by using industry standard algorithms and public key cryptography to provide strong encryption. EFS in Windows Server 2003 improves on the capabilities of EFS in Windows 2000, and users can share encrypted files with other users on file shares and even Web folders. You can configure EFS features through Group Policy and command-line tools. EFS is well suited for securing sensitive data on portable computers, and it also works well for securing data when computers are shared by multiple users.

- Removable storage: Windows Server 2003 includes support for the following removable storage devices:

- Universal Serial Bus (USB) storage devices

- Zip drives

- FireWire devices

- Indexing Service: The Windows Server 2003 Indexing Service includes the following indexing capabilities:

- Index the content of a server

- Index the content of a workstation hard drive

- Index the metadata of document files, such as location, name, author, and timestamp.
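Disk quotas charge usage to each file's owner, not to the folder the file is stored in. A quota report therefore amounts to summing file sizes per owner on one volume. The Python sketch below uses a hypothetical in-memory file list and hypothetical limits. On a real server you would use the Quota Entries window or the fsutil quota command instead. The sketch distinguishes the warning level from the limit, mirroring the two thresholds you can configure for NTFS disk quotas.

```python
from collections import defaultdict
from typing import NamedTuple


class FileEntry(NamedTuple):
    path: str     # location on the volume (does not affect whose quota is charged)
    owner: str    # file owner; quota usage is charged to this account
    size_mb: int


def quota_report(files: list[FileEntry], warning_mb: int, limit_mb: int) -> dict[str, str]:
    """Summarize per-owner usage on one NTFS volume, regardless of folder."""
    usage: dict[str, int] = defaultdict(int)
    for entry in files:
        usage[entry.owner] += entry.size_mb
    report = {}
    for owner, used in sorted(usage.items()):
        if used > limit_mb:
            status = "over limit"
        elif used > warning_mb:
            status = "over warning level"
        else:
            status = "ok"
        report[owner] = f"{used} MB ({status})"
    return report


if __name__ == "__main__":
    volume_d = [
        FileEntry(r"D:\Projects\plan.doc", "alice", 300),
        FileEntry(r"D:\Users\alice\notes.txt", "alice", 250),
        FileEntry(r"D:\Shared\budget.xls", "bob", 100),
    ]
    print(quota_report(volume_d, warning_mb=450, limit_mb=500))
    # {'alice': '550 MB (over limit)', 'bob': '100 MB (ok)'}
```

In practice, a user can only exceed the limit if the volume tracks the quota limit without enforcing it, that is, without denying disk space to users who exceed it.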

- Application services: A few benefits of using a centralized application management approach are listed here:

- Users can access centrally stored application data from any location on the network.

- You can enable offline access to network applications so that users can be disconnected from the network and still be able to perform their daily tasks.

- You can deploy and upgrade applications without any user intervention.

The Terminal Services service in Windows Server 2003 supports Remote Desktop for Administration, Remote Assistance (RA), and Terminal Server mode. Terminal Server mode enables administrators to deploy and manage applications from a central location. Because applications run on the terminal server, an administrator does not need to install a full version of the Microsoft Windows client OS and its applications on each desktop. Similarly, when applications need to be installed or updated, a single instance of the application can be installed or updated on the terminal server. Users then have access to the application without you needing to install or update it on every machine.

- Print Services: Windows NT, Windows 2000, and Windows Server 2003 all support locally connected printers and network connected printers. Locally connected printers are attached to a physical port on the print server. Network connected printers are attached to the network, and the print server communicates with them through a network protocol. Printers are represented on the print server as logical printers. A logical printer contains the properties of the printer, including the printer driver, printer configuration settings, print setting defaults, and other properties. Printing is generally configured as a three-component model: the physical printer, a logical printer stored on the print server, and the clients connected to the logical printer.

The advantages of printing through a print server are listed here:

- The print server bears the load of printing, not the client computers.

- Management functions such as auditing and monitoring can be centralized.

- All network users can see the status of the printer.

- The logical printer manages printer settings and printer drivers.

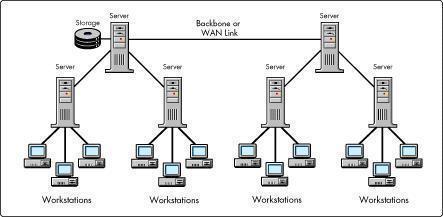

Analyzing the Existing Network Environment

After the organizational structure and management model of the company are determined, the next step is to assess the existing network infrastructure.

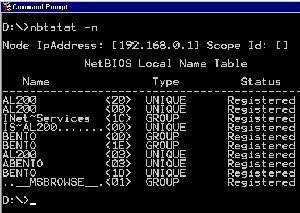

You need to start collecting information on the existing network equipment or hardware within the organization. Factors to include or determine are listed here:

- Determine whether the LAN is divided into a number of subnets. For each subnet, you would need to determine the following additional information:

- Network ID and subnet mask.

- Host IDs assigned to hosts on the subnet.

- Default gateway assigned to hosts on the subnet.

- Determine whether the subnet has a DHCP server.

- Does the DHCP server assign DNS server addresses as well?

- Does the subnet utilize a DHCP relay agent?

- Determine the location of routing devices. Here, clarify the role of the routers:

- Do the routers connect the LAN to the WAN?

- Do the routers connect the subnets on the LAN?
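For each subnet you record, you can check the network ID, the usable host range, and whether the default gateway actually falls inside the subnet using Python's standard ipaddress module. The addresses below are hypothetical examples from private address space. The sketch assumes subnets with at least two usable host addresses.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class SubnetInfo:
    network_id: str
    subnet_mask: str
    first_host: str
    last_host: str
    gateway_on_subnet: bool


def describe_subnet(host_ip: str, mask: str, gateway: str) -> SubnetInfo:
    """Derive the network ID and host range for a host, and validate its gateway."""
    network = ipaddress.IPv4Interface(f"{host_ip}/{mask}").network
    if network.prefixlen > 30:
        raise ValueError("this sketch assumes at least two usable host addresses")
    gw = ipaddress.IPv4Address(gateway)
    # A valid gateway is a host address: inside the subnet, but not the
    # network ID or the broadcast address
    usable = network.network_address < gw < network.broadcast_address
    return SubnetInfo(
        network_id=str(network.network_address),
        subnet_mask=str(network.netmask),
        first_host=str(network.network_address + 1),
        last_host=str(network.broadcast_address - 1),
        gateway_on_subnet=usable,
    )


if __name__ == "__main__":
    print(describe_subnet("192.168.10.37", "255.255.255.192", "192.168.10.1"))
    print(describe_subnet("192.168.10.70", "255.255.255.192", "192.168.10.1"))
```

The second call shows a common documentation finding. The host at 192.168.10.70 is in the 192.168.10.64 subnet, so a gateway of 192.168.10.1 is not reachable on-link from it.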

You also need to determine the following information on each routing device:

- The make and model of the router.

- Type of routing provided by the router

- For Windows routers, determine any additional services running on the router.

- Determine the version of software.

- Determine where patch panels or cables are located.

- Determine the type of cabling being used.

- Determine the location of all remote access type devices.

The next step when you analyze the existing network infrastructure is to determine which systems exist and when each system is most heavily used.

When you record information on the existing computers on the network, you should include the following information:

- The name of the computer, the location of the computer, the brand of the computer, and the computer’s model.

- The existing IP configuration of the computer.

- The motherboard’s brand and model.

- The processor type and processor speed.

- The memory type and quantity of memory.

- For network adapters; record the type and brand, and the connections and speed supported by the adapter.

- For hard drives and hard drive controllers; record the type, brand, and size.

- For attached peripherals; record the brand and model.

- Determine which services are running on servers and workstations.

- Determine the printers that are set up on the system.

- Determine which shared folders are set up on the system.

- Determine which software is installed. Record the name and version of the installed software, and any upgrades that have been deployed.

- Determine which users are allowed to access the system, and list the level of system access users have.

Analyzing an Existing Windows 2000 Active Directory Structure

If a directory structure already exists for the network you are designing, you have to analyze and document it as well. From this exercise, you may find that the existing directory structure needs only minimal changes to meet the requirements of the new network design.

The obvious starting point is to analyze the existing domain structure. Your end result should be one or more diagrams indicating the following:

- All existing domains

- How domains are arranged into:

- Forests

- Domain trees

The typical components that you have to include in the diagram depicting the existing domain structure, and how domains link to each other, are listed here:

- Full domain name of each domain.

- Show which domain is the root of the domain tree.

- Show which domain is the root of the forest.

- Show forest trust relationships

- Show how links to other forests are defined.

- Show existing shortcut trust relationships.

- Show one-way trust relationships that link to Windows NT 4.0 domains.
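Each domain tree shares a contiguous DNS namespace, and a child domain's name is its parent's name with one label added. The tree roots and the membership of each tree can therefore be derived from the full domain names you record. The Python sketch below does this for a hypothetical list of domains. It cannot identify the forest root domain. You must record the forest root separately, because it is the first domain created in the forest and this is not visible from the names alone.

```python
def parent_of(domain: str) -> str:
    """Drop the left-most DNS label, e.g. sales.contoso.com -> contoso.com."""
    return domain.split(".", 1)[1] if "." in domain else ""


def group_into_trees(domains: list[str]) -> dict[str, list[str]]:
    """Map each tree-root domain to the sorted list of domains in its tree."""
    known = {d.lower() for d in domains}
    trees: dict[str, list[str]] = {}
    for domain in sorted(known):
        root = domain
        # Walk up the namespace while the parent is also a domain in this forest
        while parent_of(root) in known:
            root = parent_of(root)
        trees.setdefault(root, []).append(domain)
    return trees


if __name__ == "__main__":
    forest = ["contoso.com", "sales.contoso.com", "emea.sales.contoso.com",
              "fabrikam.com", "hr.fabrikam.com"]
    for root, members in group_into_trees(forest).items():
        print(root, members)
```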

The second step in analyzing the existing domain structure is to determine and record the existing organizational unit (OU) structure. You should draw a diagram for each existing domain that defines its underlying organizational unit (OU) structure.

The information you collect on the existing OU structure should include the following:

- For each OU, the Active Directory objects and other OUs stored within it.

- Group Policy Objects (GPOs) linked to the particular OU.

- Permissions on the actual OU.

- Permissions on the objects contained within the OU.

- Specify whether objects within the OU inherit permissions.

The third step in analyzing the existing domain structure is to determine information on each domain in relation to sites and domain controllers:

- Determine how each domain is arranged into sites

- Determine which domain controllers exist in the sites

- Determine how domain controllers are arranged in each site

The information you should collect on your domain controllers is noted below:

- Indicate whether the server is only a domain controller.

- Indicate which member servers provide the following services:

- DHCP services

- DNS services

- Web services

- Mail services

- Indicate whether the server holds any of the following operations master roles:

- Schema Master role: The domain controller assigned the Schema Master role controls which objects are added, changed, or removed from the schema. This domain controller is the only domain controller in the entire Active Directory forest that can perform any changes to the schema.

- Domain Naming Master role: The domain controller assigned the Domain Naming Master role is responsible for tracking all the domains within the entire Active Directory forest to ensure that duplicate domain names are not created. The domain controller with the Domain Naming Master role is accessed when new domains are created for a tree or forest. This ensures that domains are not simultaneously created within the forest.

- Relative identifier (RID) Master role: The domain controller assigned the RID Master role is responsible for tracking and assigning unique relative IDs to domain controllers whenever new objects are created.

- PDC Emulator role: In domains that contain Windows NT BackupDomain Controllers (BDCs), the domain controller assigned the PDC Emulator role functions as the Windows NT Primary Domain Controller (PDC). The PDC Emulator enables down-level operating systems to co-exist in Windows 2000 and Windows Server 2003 Active Directory environments.

- Infrastructure Master role: The domain controller assigned the Infrastructure Master role updates the group-to-user references when the members of groups are changed and deletes any stale or invalid group-to-user references within the domain.

- Indicate whether the server is a Global Catalog server. The Global Catalog (GC) serves as the central information store for the Active Directory objects in a forest. A Global Catalog server is a domain controller that stores a full copy of all objects in its own domain and a partial copy of all objects in every other domain in the forest. The functions of a GC server can be summarized as follows:

  - The GC server resolves user principal names (UPNs) when the domain controller handling an authentication request cannot authenticate the user because the user account exists in another domain.

  - The GC server handles users' searches for information in Active Directory across the forest.

  - The GC server supplies universal group membership information to the domain controller that processes a network logon request.

- Indicate whether the server is a bridgehead server that replicates Active Directory information to other sites.
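Once the operations master roles and GC status of each domain controller are recorded, the placement can be checked mechanically. The Schema Master and Domain Naming Master roles are forest-wide (exactly one holder per forest), while the RID Master, PDC Emulator, and Infrastructure Master roles are domain-wide (exactly one holder per domain). The sketch also applies Microsoft's standard placement guidance that, in a forest with more than one domain, the Infrastructure Master should not be a GC server unless every domain controller in its domain is a GC. The `DomainController` record and the sample names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set

FOREST_ROLES = ["Schema Master", "Domain Naming Master"]                # one per forest
DOMAIN_ROLES = ["RID Master", "PDC Emulator", "Infrastructure Master"]  # one per domain


@dataclass
class DomainController:
    """Inventory record for one domain controller (field names are hypothetical)."""
    name: str
    domain: str
    roles: Set[str] = field(default_factory=set)
    is_global_catalog: bool = False
    is_bridgehead: bool = False


def check_role_placement(dcs: List[DomainController]) -> List[str]:
    """Return readable descriptions of problems in the recorded role placement."""
    problems = []
    for role in FOREST_ROLES:
        holders = [dc.name for dc in dcs if role in dc.roles]
        if len(holders) != 1:
            problems.append(f"forest: {role} held by {holders}, expected exactly one")

    domains = sorted({dc.domain for dc in dcs})
    for domain in domains:
        members = [dc for dc in dcs if dc.domain == domain]
        for role in DOMAIN_ROLES:
            holders = [dc.name for dc in members if role in dc.roles]
            if len(holders) != 1:
                problems.append(f"{domain}: {role} held by {holders}, expected exactly one")

        # Multidomain forest: the Infrastructure Master should not be a GC
        # unless every DC in its domain is also a GC.
        if len(domains) > 1 and not all(dc.is_global_catalog for dc in members):
            for dc in members:
                if "Infrastructure Master" in dc.roles and dc.is_global_catalog:
                    problems.append(f"{domain}: Infrastructure Master {dc.name} is a GC")
    return problems


forest = [
    DomainController("DC1", "corp.example.com",
                     {"Schema Master", "Domain Naming Master", "RID Master", "PDC Emulator"},
                     is_global_catalog=True),
    DomainController("DC2", "corp.example.com", {"Infrastructure Master"}),
    DomainController("DC3", "eu.corp.example.com",
                     {"RID Master", "PDC Emulator", "Infrastructure Master"},
                     is_global_catalog=True),
]
print(check_role_placement(forest))  # prints []
```

In this sample, DC3 may hold the Infrastructure Master role while being a GC because it is the only domain controller in its domain, so every domain controller there is a GC.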

Analyzing an Existing Windows NT 4.0 Infrastructure

If the network you are designing already contains a Windows NT 4.0 infrastructure, you have to analyze and document that infrastructure as well. In such an environment, a domain model exists, but no directory service is deployed. Windows NT 4.0 infrastructures use primary domain controllers (PDCs) and backup domain controllers (BDCs).

The primary domain controller (PDC) holds the centralized accounts database, which contains the security information used to manage users and resources; this is how domains are implemented in Windows NT network environments. For reliability, the accounts database is replicated to the backup domain controllers (BDCs). The master copy of the database, however, resides only on the PDC: changes are made on the PDC and then replicated to the BDCs. Network resources such as network printers and files are typically located in resource domains, each of which has its own PDC and BDCs.
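The single-master arrangement described above, where only the PDC accepts changes and BDCs hold read-only copies that are brought up to date from it, can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical model (the change-log format and class names are assumptions), not a description of the actual Windows NT 4.0 Netlogon replication protocol.

```python
from typing import Dict, List, Tuple

# (serial number, account name, account data) for one change; hypothetical format.
Change = Tuple[int, str, str]


class PrimaryDC:
    """Holds the master, writable copy of the accounts database."""

    def __init__(self) -> None:
        self.accounts: Dict[str, str] = {}
        self.change_log: List[Change] = []

    def set_account(self, name: str, data: str) -> None:
        """All account changes are made here and given the next serial number."""
        self.accounts[name] = data
        self.change_log.append((len(self.change_log) + 1, name, data))

    def changes_since(self, serial: int) -> List[Change]:
        return [change for change in self.change_log if change[0] > serial]


class BackupDC:
    """Holds a read-only replica that is brought up to date from the PDC."""

    def __init__(self) -> None:
        self.accounts: Dict[str, str] = {}
        self.serial = 0  # last change applied from the PDC

    def set_account(self, name: str, data: str) -> None:
        raise PermissionError("the BDC copy is read-only; make changes on the PDC")

    def replicate_from(self, pdc: PrimaryDC) -> int:
        """Apply every change not yet seen; return how many were applied."""
        pending = pdc.changes_since(self.serial)
        for serial, name, data in pending:
            self.accounts[name] = data
            self.serial = serial
        return len(pending)


pdc, bdc = PrimaryDC(), BackupDC()
pdc.set_account("jdoe", "member of Sales")
pdc.set_account("asmith", "member of IT")
print(bdc.replicate_from(pdc))  # prints 2
```

The key property is that a write attempted on a BDC is refused; this is why, in a Windows NT 4.0 domain, the PDC must be reachable for changes to the accounts database.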

If a Windows NT 4.0 infrastructure is already in place, you can implement your new network design requirements in one of two ways:

- Upgrade the network and keep the existing domain structure.

- Restructure the entire existing Windows NT 4.0 infrastructure.

A few benefits of retaining the existing Windows NT domain structure are listed here:

- You can upgrade your existing domain objects to Active Directory.

- Existing security policies are retained.

- Existing users’ passwords and profiles are retained.

When analyzing and recording information on an existing Windows NT 4.0 domain structure, include the following information:

- Domain name.

- Servers within the domain: identify each server by name and IP address, state whether it is a database server or a file server, and note which of the following services it provides:

  - DHCP services

  - DNS services

  - Internet Information Services (IIS)

  - Routing and Remote Access Service (RRAS)

- Domain controllers within the domain.

- The resources of the domain.

- The users of the domain.

- Any trusts between your domains.
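Windows NT 4.0 trusts are one-way and not transitive: if domain A trusts domain B and B trusts C, A does not trust C. The trusts you record therefore have to be read exactly as listed. The Python sketch below records each trust as a hypothetical `(trusting domain, trusted domain)` pair and lists every ordered pair of domains that has no direct trust; the domain names follow a hypothetical layout in which two resource domains trust one accounts domain.

```python
from itertools import permutations
from typing import List, Set, Tuple

# (trusting domain, trusted domain): one one-way trust as recorded in the inventory.
Trust = Tuple[str, str]


def trusts_directly(trusts: Set[Trust], trusting: str, trusted: str) -> bool:
    """NT 4.0 trusts are not transitive, so only an explicit trust counts."""
    return (trusting, trusted) in trusts


def pairs_without_trust(domains: List[str], trusts: Set[Trust]) -> List[Trust]:
    """List every ordered domain pair that has no direct one-way trust."""
    return sorted(set(permutations(domains, 2)) - trusts)


domains = ["ACCOUNTS", "RESOURCES1", "RESOURCES2"]
recorded = {("RESOURCES1", "ACCOUNTS"), ("RESOURCES2", "ACCOUNTS")}

print(trusts_directly(recorded, "RESOURCES1", "ACCOUNTS"))  # prints True
print(trusts_directly(recorded, "ACCOUNTS", "RESOURCES1"))  # prints False
for pair in pairs_without_trust(domains, recorded):
    print("no trust:", pair)
```

Listing the missing pairs explicitly makes it easy to confirm, during the upgrade or restructure, which access paths exist today and which do not.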
